Science isn’t Science without Scientists

In the 10 October 2014 issue of Science, the editors ran a policy article titled “Amplify scientific discovery with artificial intelligence.” The abstract reads:

Technological innovations are penetrating all areas of science, making predominantly human activities a principal bottleneck in scientific progress while also making scientific advancement more subject to error and harder to reproduce. This is an area where a new generation of artificial intelligence (AI) systems can radically transform the practice of scientific discovery. Such systems are showing an increasing ability to automate scientific data analysis and discovery processes, can search systematically and correctly through hypothesis spaces to ensure best results, can autonomously discover complex patterns in data, and can reliably apply small-scale scientific processes consistently and transparently so that they can be easily reproduced. We discuss these advances and the steps that could help promote their development and deployment.

By printing such policy papers, the editors are exchanging one scientific goal (the search for truth) for another (economic growth). This is not the advocacy of scientific progress, but rather the advocacy of science as a means to economic growth on behalf of organized industry, at the expense of individual scientists who will be replaced by “the artificially intelligent assistant.” The burden of proof is on the authors and the editors to show why increasing automation in science will not result in job losses over time.

Growth in Practice

There are good reasons to question the merit of growth as a policy objective.

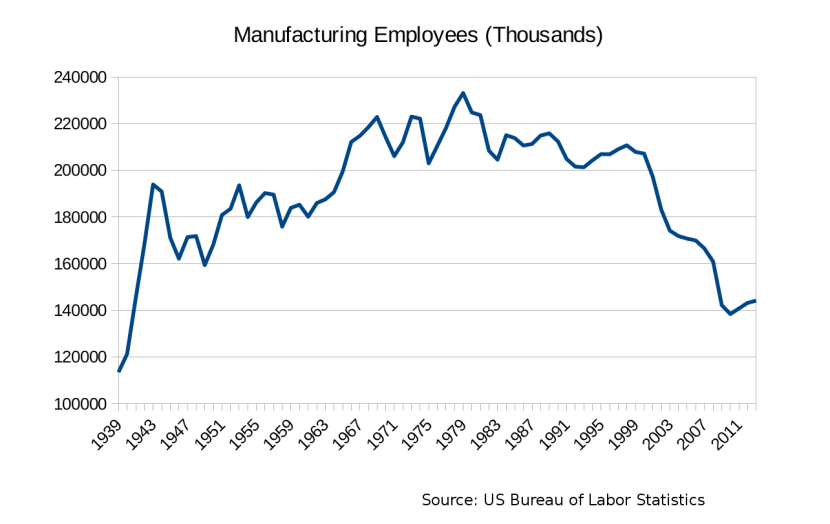

Automation typically means increasing economic growth while decreasing the number of quality employment opportunities: for example, in terms of the raw number of jobs filled, US manufacturing employment is at levels not seen since just before we entered World War II.

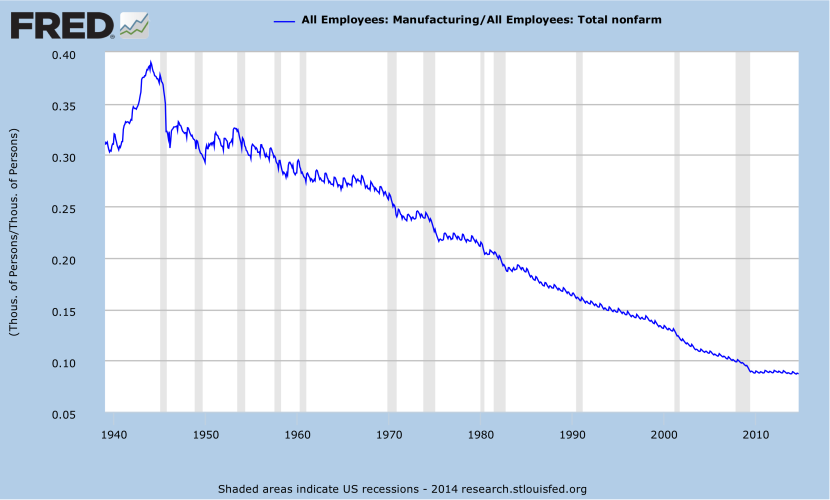

The availability of manufacturing jobs relative to overall job opportunities has also been in steady decline since WWII:

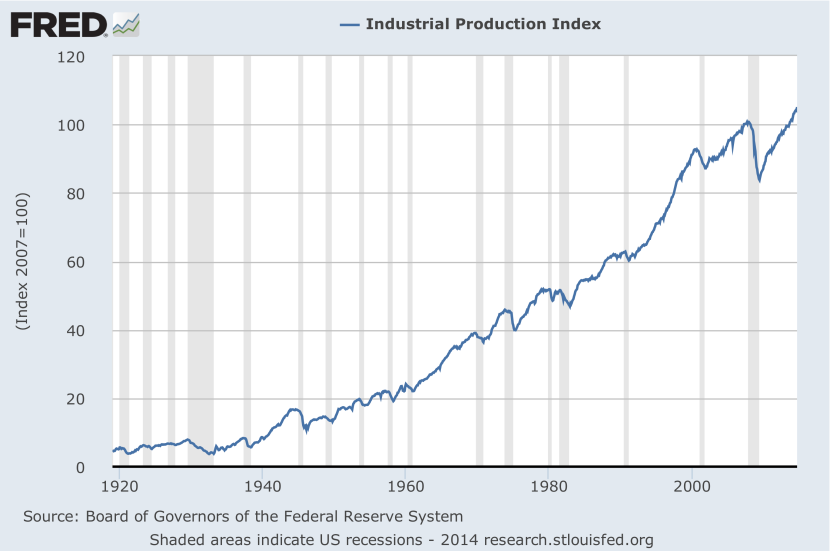

Many of these lost jobs were high-paying union jobs with benefits. Despite these job losses, the value of US manufacturing output has increased over 800% since WWII.

Fewer people yield far more output because of automation.

It is difficult to maintain that increased productivity — though it costs jobs — nevertheless yields a net benefit to society. The value created by increased productivity has not been shared uniformly across the economy: incomes for typical households have been stagnant since 1965. In 1965, median US household income stood at $6,900. Adjusted for inflation this amount equals $50,292 in 2012 dollars. The median household income in 2012 was $51,371, an increase of about 2%. Adjusted for inflation, GDP increased over 360% between 1965 and 2012. Most growth since 1965 has therefore been growth in inequality. Growth means fewer jobs. Increased automation has not meant abundance for everybody, but it has meant a scarcity of high-quality jobs for many.
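The comparison above can be checked with a few lines of arithmetic. The following is a minimal sketch that uses only the dollar figures quoted in the paragraph; the function and variable names are illustrative assumptions, and the GDP figure is the "over 360%" lower bound stated above rather than an independently sourced number.

```python
def percent_change(old: float, new: float) -> float:
    """Return the percentage change from old to new."""
    return (new - old) / old * 100.0


# Figures quoted in the text (not independently sourced here).
income_1965_nominal = 6_900          # median household income, 1965 dollars
income_1965_in_2012_dollars = 50_292  # the same amount, adjusted for inflation
income_2012 = 51_371                 # median household income, 2012

# Inflation multiplier implied by the two 1965 figures.
implied_inflation_factor = income_1965_in_2012_dollars / income_1965_nominal

# Real growth in median household income between 1965 and 2012.
income_growth = percent_change(income_1965_in_2012_dollars, income_2012)

# Real GDP growth over the same period, taken as the text's lower bound.
gdp_growth_lower_bound = 360.0

print(f"Implied inflation factor: {implied_inflation_factor:.2f}x")
print(f"Real median income growth: {income_growth:.1f}%")
print(f"GDP growth is at least {gdp_growth_lower_bound / income_growth:.0f} times income growth")
```

On these figures, real median household income grew by roughly 2% while real GDP grew by more than 360%, which is the gap the paragraph describes.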

These macro-economic growth imperatives can lead to additional adverse consequences beyond job loss or wage stagnation. For example, farm prices in the late 1920s collapsed due to over-production following the introduction of technologies like the tractor and the combine; the problems of industrial agriculture precipitated a broad regime of price controls and subsidies which persists to this day, at taxpayer expense, increasing the cost, complexity and fragility of the food system. As complexity increases, costs increase disproportionately, because each added layer of complexity must also address the problems created by its own existence. This is a special case of diminishing returns, and can appear in the form of increased managerial overhead.

Increasing automation in science by using AI is likely to have similar unintended consequences.

Internal Inconsistencies

Furthermore, the article published in Science appears to contain a number of internal contradictions which the editors should have caught. If, as the first sentence asserts, “technological innovations are … making scientific advancement more subject to error and harder to reproduce,” then it is hard to see why increasing the role of technologies like artificial intelligence is going to help improve things. If, as the second paragraph states, “cognitive mechanisms involved in scientific discovery are a special case of general human capabilities for problem solving,” then it is hard to see why it follows that the role of humans in “scientific discovery” should be considered a “bottleneck” and therefore diminished.

Specifically, given the large number of scientific null results that are neither written up nor published, it would appear that the authors are making an untenable argument: that artificial intelligence represents an improvement over the unknown efficacy of human research. To make such a case, the authors would need to show that artificial intelligence can learn from a negative or null result as well as a human (which cannot be validated since humans are unwilling to publish such null results). The authors are, in effect, assuming that only positive results are relevant to scientific discovery. By contrast, the Michelson-Morley experiment is perhaps one of the most celebrated negative results of the modern era, paving the way for Special Relativity, and in turn for General Relativity and such technologies as atomic clocks and GPS.

Relevance to the Meaning of Science

In an era where science is no longer a field of human endeavor, but is increasingly automated, Federal science funding can only be an industry subsidy. Any remaining scientists would become merely means to organized industry’s macro-economic ends. This calls into question the very meaning of science itself as a human endeavor, but offers no solutions.

To ask a similar question: consumers may purchase and enjoy literature written by algorithms, but should they? Is it still properly literature if it doesn’t reflect the human condition? Ought we not educate young people to enjoy the experience of exploring the human condition and our place in the Kosmos? Or has the promise of secular humanism run its course, and the classical University education given way to an expensive variety of technical training, by means of which one takes on debt to procure a new automobile every few years? Is that now the meaning of life? Is this a new age of superstition and barbarism, where technology “advances” according to privileged seers who read the entrails of data crunched by the inscrutable ghost in the machine, waiting for a sign from the superior intelligence to come shining down from the clouds? So much for the love of learning.

These are not simply rhetorical questions, for if automation makes science as we know it obsolete, then there is — if nothing else — a substantive policy debate to be had where the federal science budget is concerned.