Jaron Lanier’s discussion of privacy and technology in the November 2013 issue of Scientific American is quite nuanced and insightful. Unfortunately, his conclusion, that privacy needs to be monetized, misses the more fundamental problem: his solution is designed to preserve economic growth, yet it is this type of growth that promotes the technological developments used primarily for public and private surveillance.

Specifically, it is the increases in complexity and specialization associated with technological growth that make its consequences for privacy difficult to grapple with socially. It’s a cliche to point out that technology changes faster than culture can adapt to those changes; what’s less common is a discussion of the financial imperatives driving this technological change. This change is described as though it were necessary, as though technology were a force of nature, and the terminology used to describe it carries implicit positive biases. It is often described, for example, as “progress”: a benign succession of “revolutions” where constant improvements constantly amputate the past. Calling this change “progress” implies that these changes are always good, though the human history of such change is remarkably short, and the climatic and environmental changes associated with “progress” have proved sudden and dramatic. Economic growth and rapid technological “progress” may yet prove to be thoroughly disastrous.

If a new technology — like online targeted advertising or deep packet inspection — materially or emotionally complicates our efforts to satisfy more basic social and personal needs, perhaps it is more rational to simply dispense with that new technology, than to cope by adding a new layer of complexity.

A more sustainable solution is probably closer to what John Kenneth Galbraith described in a thought experiment from 1967. Writing in The New Industrial State, noted economist and former Kennedy policy advisor Galbraith pointed out:

“It would, prima facie, be plausible to set a limit on the national product that a nation requires. The test of national achievement would then be how rapidly it could reduce the number of hours of toil that are needed to meet this requirement.”

Monetizing privacy is a solution designed to support continued economic growth by commoditizing human activity within a market structure, when, in fact, the widespread value attached to growth is at once arbitrary and the source of the problem. For most of human history, not much changed: the present value placed on growth is an anomaly, and may well be an error. There is certainly little evidence to demonstrate its long-term desirability.

Growth imposes heavy burdens rather than easing them with conveniences. The 40-hour work week is modest compared to the 60- or 80-hour work week of the 1800s, but is still an abomination in the history of humankind. Juliet B. Schor, who taught economics at Harvard for 17 years, points out that medieval peasants only worked 1/3 of the year. Marshall Sahlins, who teaches anthropology at University of Chicago, estimates that the average hunter-gatherer works about 15 hours per week.

In her 1993 book, The Overworked American, Schor observes:

“One of capitalism’s most durable myths is that it has reduced human toil. This myth is typically defended by a comparison of the modern forty-hour week with its seventy- or eighty-hour counterpart in the nineteenth century. The implicit — but rarely articulated — assumption is that the eighty-hour standard has prevailed for centuries…”

“These images are backward projections of modern work patterns. And they are false. Before capitalism, most people did not work very long hours at all. The tempo of life was slow, even leisurely; the pace of work relaxed. Our ancestors may not have been rich, but they had an abundance of leisure. When capitalism raised their incomes, it also took away their time.”

Between Schor and Galbraith, we are forced to admit that one choice our society does not offer is the freedom to choose between a life filled with things or a life filled with free time.

In his 1974 book, Stone Age Economics, Sahlins offers some observations about working life among hunter-gatherers:

“In the total population of free-ranging Bushmen contacted by Lee, 61.3 per cent (152 of 248) were effective food producers; the remainder were too young or too old to contribute importantly…”

“In the particular camp under scrutiny, 65 per cent were ‘effectives’. Thus the ratio of food producers to the general population is actually 3:5 or 2:3…”

“But, these 65 per cent of the people ‘worked 36 per cent of the time, and 35 per cent of the people did not work at all’!”

It is worth pointing out that hunter-gatherers enjoy a high quality of life, and, if able to survive early adolescence, stand a good chance of remaining healthy and active into their 70s, with a free social support network to care for them as they age.

In a 2007 survey of longevity among living hunter-gatherer populations, researchers Gurven & Kaplan found a life expectancy comparable to that of 18th-century Europe.

This is not the “solitary, poor, nasty, brutish, and short” existence proposed by Thomas Hobbes. This is more like the “Equal liability of all to labour” demanded by Marx and Engels, to go along with the “Combination of agriculture with manufacturing industries” we call “factory farms” and the “gradual abolition of the distinction between town and country” we call the suburbs.

Due to automation, today’s work week could be much shorter. According to the Bureau of Labor Statistics, productivity has more than doubled since 1965, though wages and the length of the work week have remained the same. There is an implicit, unexamined assumption that everybody wants more stuff instead of more leisure.

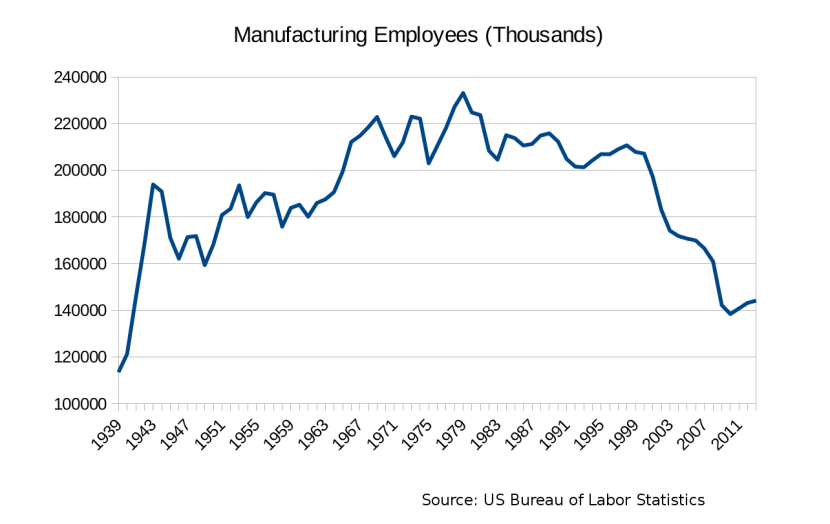

Consider the following graph, and keep in mind that one constant factor not reflected in the data is the 40-hour work week:

Another constant factor not reflected in the data above is compensation. According to the US Census, in 1965 median household income was $6,900. Adjusted for inflation, the 2011 value of this amount is $48,539. In 2011, median household income was $50,502. Over this period, incomes have increased 4%, while manufacturing output per worker has more than doubled. There is a very clear, though entirely implicit social value present here: it doesn’t matter how valuable our output is, you simply must work 40 hours per week.
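The arithmetic behind that 4% figure can be checked with a short sketch. The two income values are the ones quoted above; the productivity factor of 2.0 is an assumption that reads "more than doubled" as a lower bound, and the helper name `percent_change` is purely illustrative.

```python
# Figures quoted in the text (US Census, as cited above).
income_1965_in_2011_dollars = 48_539  # $6,900 in 1965, adjusted to 2011 dollars
income_2011 = 50_502                  # median household income in 2011


def percent_change(old: float, new: float) -> float:
    """Return the change from old to new as a percentage of old."""
    return (new - old) / old * 100


real_income_growth = percent_change(income_1965_in_2011_dollars, income_2011)

# Assumption: "more than doubled" is treated as a factor of exactly 2.0,
# which gives a lower bound of 100% growth in output per worker.
productivity_growth_lower_bound = percent_change(1.0, 2.0)

print(f"Real median household income growth: {real_income_growth:.1f}%")
print(f"Productivity growth (lower bound):   {productivity_growth_lower_bound:.0f}%")
```

On these numbers, real household income rose by roughly 4% over a period in which output per worker rose by at least 100%.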

The growth in productivity illustrated above equates not to growing incomes nor to fewer hours of toil, but to fewer employees. Technological growth does not create jobs, it destroys jobs.

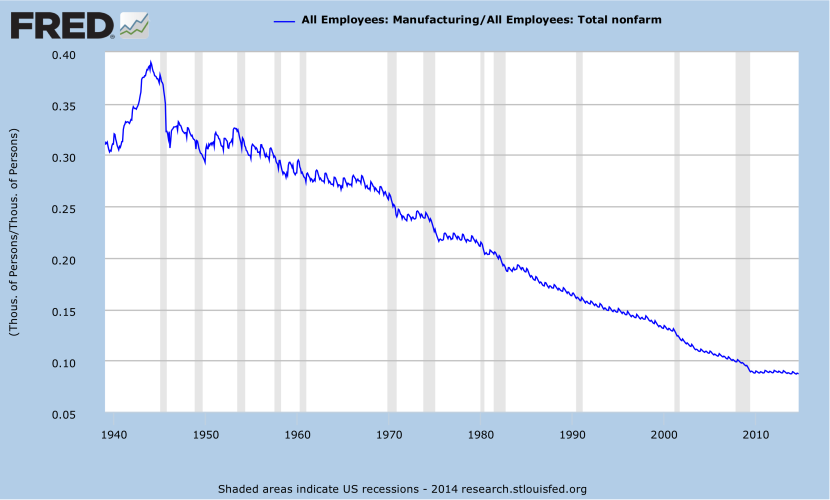

Consider this graph of manufacturing employment indexed to non-farm payrolls:

What the above chart helps show is that, while increases in productivity have not led to higher pay or shorter hours, these increases have led to fewer manufacturing jobs available. Computerization in offices has had effects comparable to industrial automation.

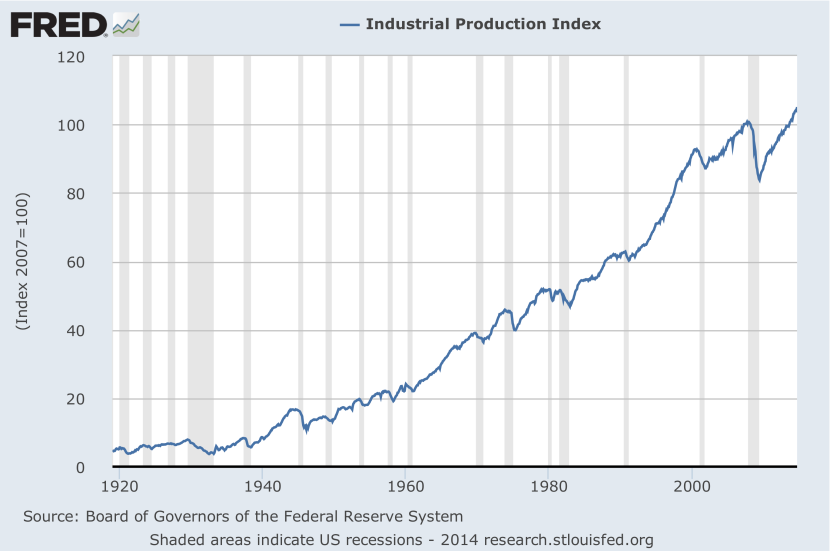

Furthermore, these changes have been overwhelmingly profitable for the owners of capital. While the above chart clearly shows that there are fewer manufacturing jobs in the United States than there once were, it is not at all the case that the United States “doesn’t make anything anymore.” The US makes a lot of stuff, and increases in productivity have led to clear increases in the raw value of manufacturing output:

As far as growth is concerned, most of us are getting the short end of the stick, as it were.

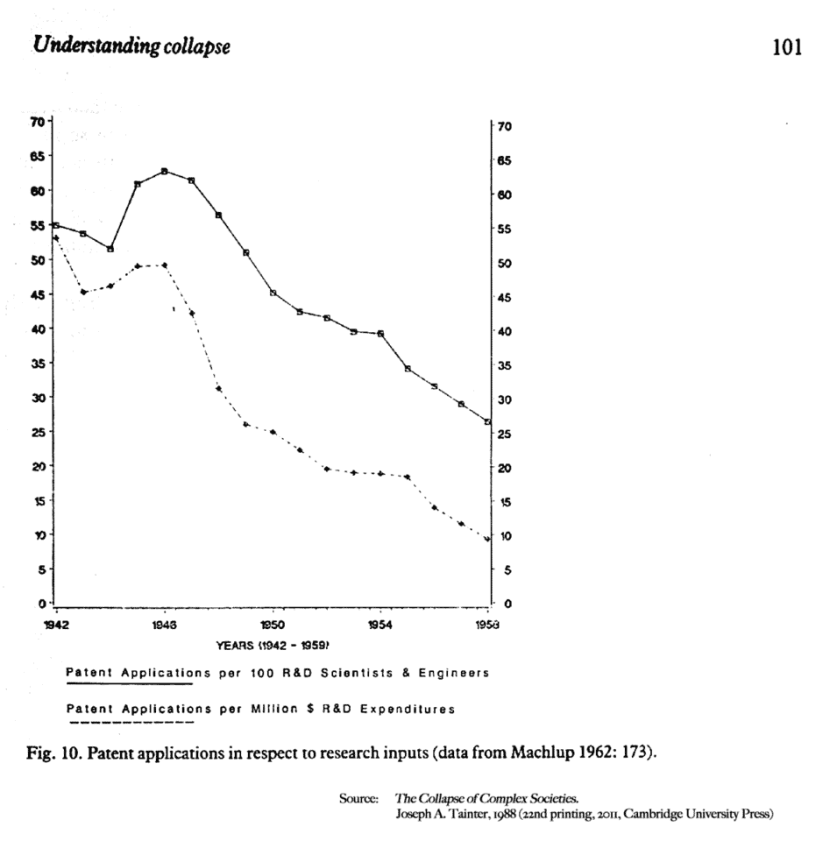

In terms of the economics of growth and the solution Lanier provides to the free rein of corporations and governments over the collection of personal information, archaeologist Joseph Tainter offers some useful insights. In Chapter 4 of The Collapse of Complex Societies (now in its 22nd printing from Cambridge University Press), Tainter discusses numerous archaeological examples of diminishing returns.

What Tainter finds is that when a society makes repeated investments in complexity, those investments frequently suffer from diminishing returns.

Tainter writes:

“Control and specialization are the very essence of a complex society. The reasons why investment in complexity yields a declining marginal return are: (a) increasing size of bureaucracies; (b) increasing specialization of bureaucracies; (c) the cumulative nature of organizational solutions; (d) increasing taxation; (e) increasing costs of legitimizing activities; and (f) increasing costs of internal control and external defense.”

An apparatus to monetize privacy would require additional bureaucracy, which would need to be funded and managed, essentially making the cost of such a solution prohibitive — unless that solution perhaps generates tax revenue by subsidizing the types of growth whose consequences need to be controlled. Put more simply: we don’t need privacy-invading technology, at all.

Measured quantitatively, these technologies do not improve our standard of life. Take medical technology, for example. The following chart illustrates increases in life expectancy indexed to investments in medical technology:

The above chart has profound implications: very few medical technologies in the past 150 years have had a substantive impact on standard of living and overall outcomes.

First, sanitation and hygiene: Ignaz Semmelweis proposed that doctors should wash their hands between procedures at Vienna General Hospital in 1847. Second, anesthetics and analgesics: the discovery of painkillers and general anesthetics allows for operations where patients might otherwise die of shock during surgery. Third, antibiotics: the basic science research that produced antibiotics was accomplished with about $20,000 worth of funding. Antibiotics are, in short, what makes a lot of modern anatomical knowledge practical. Fourth, the vaccine, which was made practical right about where the chart above takes a nose dive in 1954. That nose dive is called “the point of diminishing returns.”

Since 1954, most of modern medicine has been concerned with counteracting the effects of industrial civilization: poor diet, sedentary lifestyle, environmental pollution, artificial toxins from synthetic materials, and the like. As such, “growth” in modern medicine is generally characterized by diminishing marginal utility.

The notion that growth deprives individuals of leisure and the time needed for intellectual improvement has long been recognized by laborers. In 1878, the Constitution of the Knights of Labor advocated:

“The reduction of the hours of labor to eight per day, so that laborers may have more time for social enjoyment and intellectual improvement, and be enabled to reap the advantages conferred by the labor-saving machinery which their brains have created.”

What is new in our era is that we no longer know how hard we actually work, and what little it really wins us.

And despite widespread acceptance of the notion that consumer culture, disposable goods, and gross materialism are problems, nobody is willing to question the growth imperative that enables it all.

Image Source: Disney/Pixar Studios, WALL-E

The only sustainable solution is to “defund” the system — to remove the incentive for growth, and the increasing marginal costs associated with growth. The “technological fix” is less technology, not new or “better” technology. To prevent the continual onslaught of new technology, it is necessary to remove the growth imperative.

The consequences of “planned obsolescence” are not the inevitable byproducts of technology: they are planned. Where technology is not pushed, but “naturally” produces labor savings, this benefit can be directed towards shortening the work week or increasing pay. “Progress” doesn’t have to mean creating unemployment. These are policy decisions and cultural values.

A solution to the problem of electronic privacy invasion should, at the very least, not fund growth in the industries that profit from invading privacy.